Context, Not Just Data, Is the Real Revolution

Before we talk about artificial intelligence replacing jobs or transforming society, it’s worth pausing to understand what actually changed.

The shift is not that machines can talk.

The shift is that machines can respond differently depending on context, and can scale that ability across millions of interactions simultaneously.

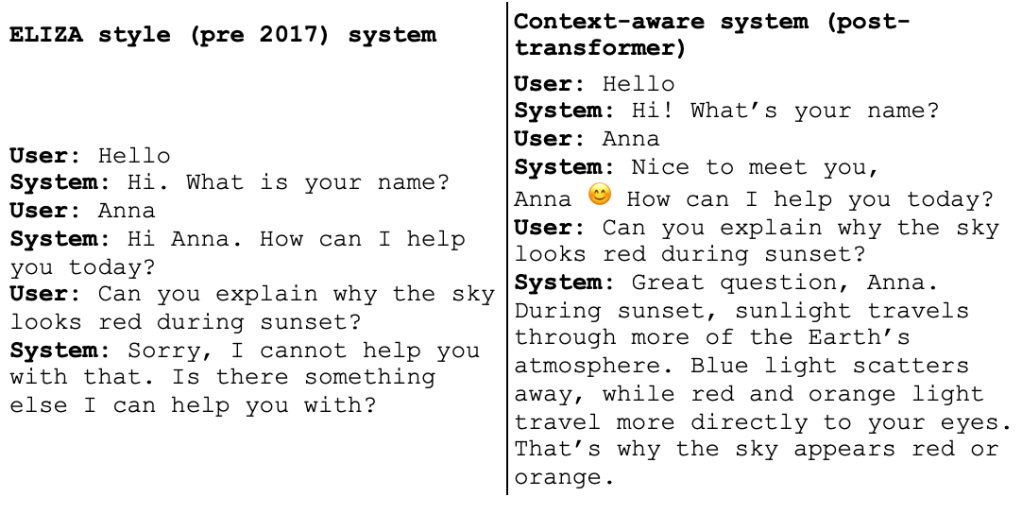

To see the contrast, consider a simplified comparison:

About two decades ago, when I first used a home computer, AI felt like a gimmick. Chatbots were basic software. One would ask:

“What is your name?” You would type in your name, and you got a programmed response. The chatbot didn’t really understand what you typed. It just followed the rules someone had written into its code. It was a pretty static system.
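For readers who like to see the mechanics, here is a minimal sketch of that kind of static system. The function name `rule_based_reply` and the specific rules and replies are hypothetical, made up for illustration rather than taken from any real chatbot of that era.

```python
def rule_based_reply(message: str) -> str:
    """Pick a canned reply using fixed keyword rules (hypothetical rules)."""
    text = message.lower()
    # Rule 1: the user mentions their name.
    if "name" in text:
        return "Nice to meet you!"
    # Rule 2: the user greets the bot.
    if "hello" in text:
        return "Hello! What is your name?"
    # Fallback: nothing matched, so the bot has nothing to say.
    return "I do not understand."


if __name__ == "__main__":
    for line in ["hello", "My name is Ada", "What is the weather?"]:
        print(line, "->", rule_based_reply(line))
```

Every behavior has to be written in by hand. Anything outside the rules falls straight through to the fallback reply.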

About a decade later, in 2017, a team of researchers at Google Brain and Google Research published the paper “Attention Is All You Need”, introducing the Transformer. This design removed older constraints, notably the need to process a sequence one step at a time, and opened the door to a different way of processing long sequences of data, including language: one that scales and, for lack of a better word, understands context better. It now serves as the backbone of many modern generative AI systems. No more static responses: computers finally had the processing power and the architecture to respond in a rich, context-aware way.

I had been experimenting with early versions of these tools during my PhD, before the public launch of ChatGPT, and even back then they seemed almost too powerful to be true. Later, when ChatGPT launched in November 2022, it didn’t feel like a typical product launch. It felt like a moment. Millions of people around the world suddenly had access to a powerful conversational interface built on generative pre-trained transformers.

This article is not about whether AI will replace humans. Nor is it a technical deep dive. Instead, it’s a stage-setter, an attempt to outline the real shift we’re living through, and why this matters across domains.

AI Does Not Understand, but Simulates Understanding (and It Is Pretty Good at It)

Let’s begin with an essential distinction:

AI does not understand.

It simulates understanding.

Large language models don’t possess consciousness, emotions, or intentions. What they do is generate responses that statistically align with patterns learned during training: the patterns of language, relationships, and structure.

This simulation is what makes them feel smart. It is not human understanding but a context-aware simulation, and that alone is a leap from the rigid, rule-based responses of early software.

Context as the Axis of Intelligence

Context has always mattered in human interaction, reasoning, and decision making. We intuitively adjust our interpretation based on situation, background knowledge, and subtle cues.

What is new is this:

Machines can now model relational context at scale.

Scaling context means modeling relationships across inputs in a way that adapts output without explicit reprogramming for each scenario.

These systems can ingest large inputs, weigh relationships across them, and generate responses dynamically.

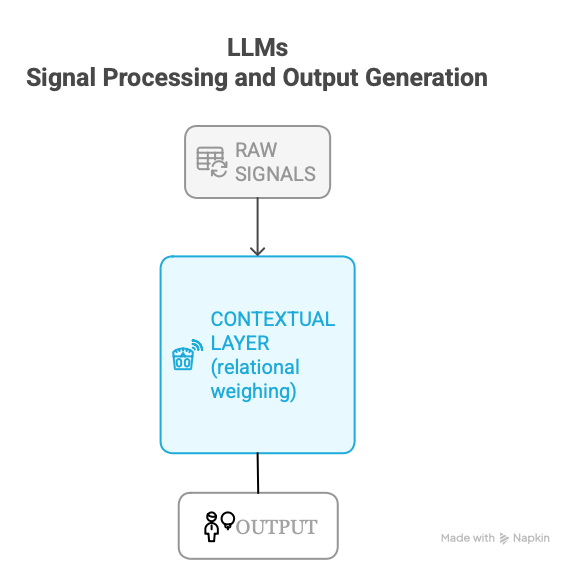

Figure 1: Core Context Scaling Concept

Attention-based models compute relevance across all inputs simultaneously, enabling scalable contextual reasoning. This differs from fixed rule bases or linear decision paths.

This is not merely a faster model. It’s a paradigm shift in how machines relate to information. Modern AI doesn’t follow predetermined steps; it weights relationships between inputs dynamically, tailoring its output to the specifics of the moment.

Again, this is not human understanding, but it is an impressive functional approximation that behaves as if it were contextual.
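To make “computing relevance across all inputs” a little more concrete, here is a minimal sketch of scaled dot-product attention, the core operation described in “Attention Is All You Need”, written in plain Python. It is a simplification: real models add learned projections, multiple attention heads, and much larger vectors, and the function names and toy numbers below are my own assumptions for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors.

    Returns (outputs, weights): one blended value vector and one
    weight vector per query.
    """
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Relevance of every input to this query, computed in one pass.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # Output is a weighted mix of all values, not a single rule's result.
        blended = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(blended)
        all_weights.append(weights)
    return outputs, all_weights


if __name__ == "__main__":
    keys = [[1.0, 0.0], [0.0, 1.0]]    # two toy inputs
    values = [[10.0], [20.0]]          # what each input contributes
    queries = [[5.0, 0.0]]             # a query that resembles the first input
    outputs, weights = attention(queries, keys, values)
    print("weights:", weights[0])
    print("output:", outputs[0])
```

No rule says “if the query looks like input one, answer ten.” The weighting falls out of how similar the query is to each input, which is why the same code adapts when the inputs change.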

Why This Matters Across Domains

At its heart, the change isn’t about having access to more information. It’s about handling relationships among information at scales that humans, unaided, cannot manage easily.

Because of its scalability and magnitude, this shift from static systems to context-sensitive ones is not limited to any single domain. It is reshaping workflows, how we think and work and, by extension, competitive forces in the open market and how success is measured across industries.

A Short Tour of Impact Areas

Here is a brief illustration of how this plays out:

Learning & Knowledge Work

Instead of memorizing static frameworks, learners interact with information dynamically. Dialogue replaces monologue. Feedback becomes immediate.

Decision Support

Rather than fixed rules steering choices, AI offers weighted suggestions that reflect patterns across a rich input space.

Analysis & Synthesis

The manual burden of modeling and pattern recognition shifts to the machine. Human judgment in interpreting, verifying, and deciding remains central, but the space of possibilities expands.

What unifies these shifts? In all these areas, the underlying transformation is the same:

Context is no longer local and fixed. It is relational and dynamic.

Machines simulate this at scale. Humans interpret it with judgment.

From Scarcity to Adaptive Abundance

We are moving from a world where knowledge was scarce and static, to one where knowledge is abundant and adaptive.

The bottleneck in this evolving landscape is increasingly human judgment and decision making. This is where human judgment, wisdom, and curiosity matter most, and they are likely to matter more as systems evolve.

Final Thought

We are not hurtling toward an uncanny human-like machine consciousness. Instead, we are living through an evolution in how information is structured and used.

That evolution is unlikely to make humans obsolete, but it does change the nature of human work across domains. The skill of the future may not be what you know, but how well you frame, interpret, and integrate information in relation to other information.
